About

I am a fifth-year Ph.D. candidate in the Electrical and Computer Engineering Department at the University of Maryland, under the supervision of Prof. Sennur Ulukus.

My research interests include wireless communication, federated learning, multi-task learning, semantic communication, and video streaming technologies. Here are some federated learning and semantic communication problems that I am currently working on:

- Personalization

- Statistical Heterogeneity (Non-I.I.D)

- Communication Efficiency

- End-to-end Semantic Communication

- Semantic Multi-user Communication

- Semantic Multi-resolution Communication

News

[Sep. 2023] Our paper "Semantic Multi-Resolution Communications" was accepted to the IEEE Global Communications Conference, Kuala Lumpur, Malaysia, December 2023.

[Jan. 2023] Our paper "Personalized decentralized multi-task learning over dynamic communication graph" was accepted to the IEEE Conference on Information Sciences and Systems, Baltimore, MD, March 2023.

[Oct. 2022] Our paper "Hierarchical over-the-air FedGradNorm" was accepted to the 56th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, CA, October 2022.

[Sep. 2022] Our paper "FedGradNorm: personalized federated gradient-normalized multi-task learning" was accepted to the IEEE International Workshop on Signal Processing Advances in Wireless Communications, Oulu, Finland, July 2022.

Selected Research

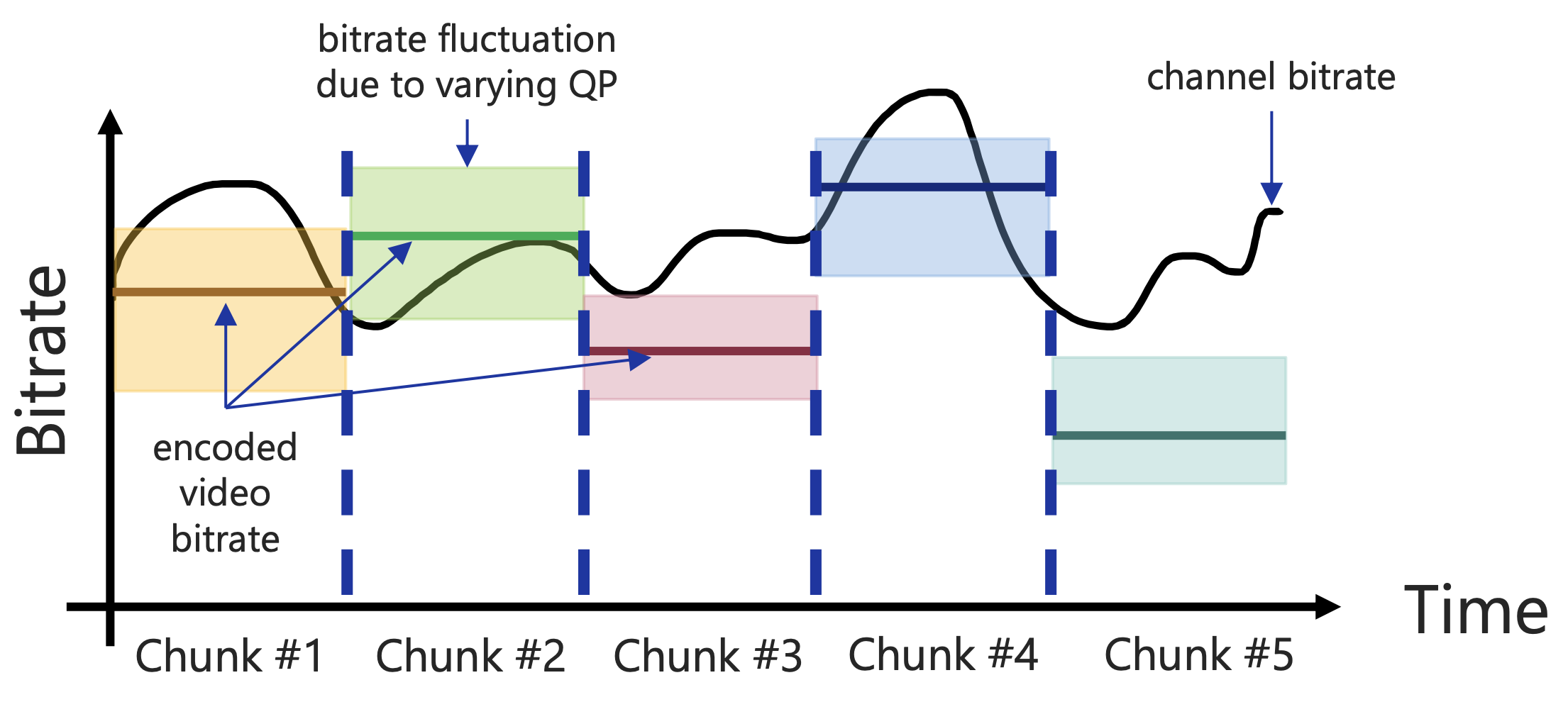

DL-based Video Streaming

Deep Learning-Based Real-Time Rate Control for Live Streaming on Wireless Networks

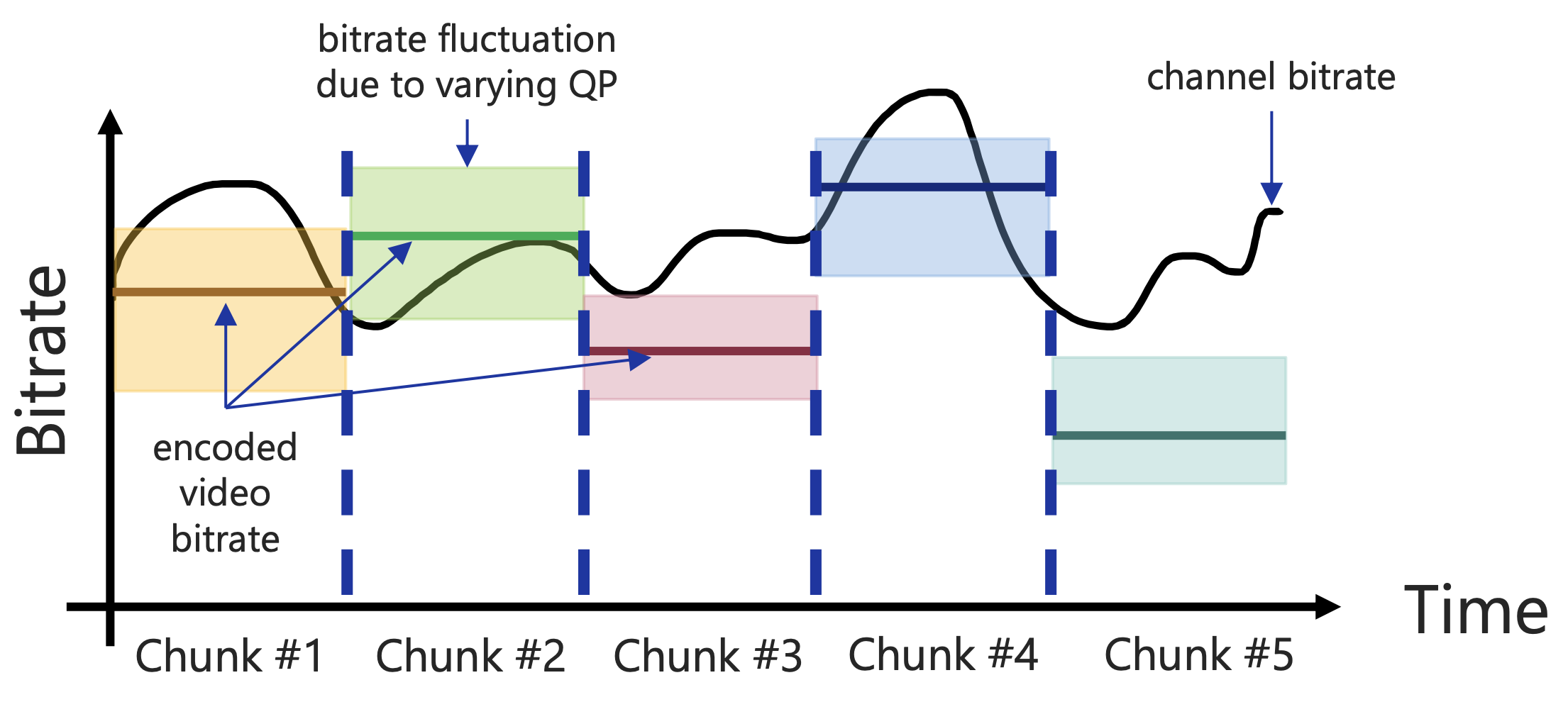

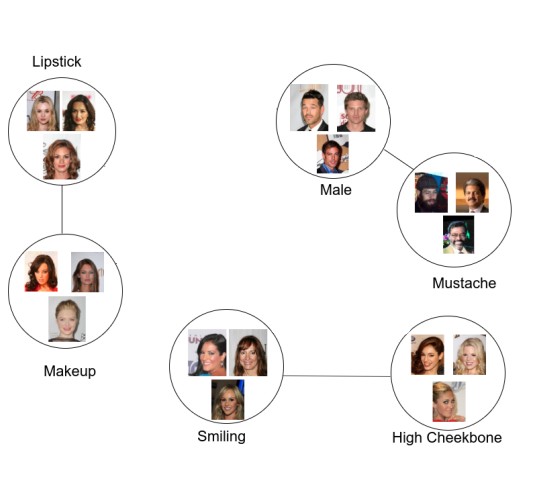

Semantic Communication

Semantic Multi-Resolution Communications

Deep learning-based joint source-channel coding (JSCC) has demonstrated significant advancements in data reconstruction compared to separate source-channel coding (SSCC). This superiority arises from the suboptimality of SSCC when dealing with finite block-length data. Moreover, SSCC falls short in reconstructing data in a multi-user and/or multi-resolution fashion, as it only tries to satisfy the worst channel and/or the highest quality data. To overcome these limitations, we propose a novel deep learning multi-resolution JSCC framework inspired by the concept of multi-task learning (MTL). The proposed framework encodes data for different resolutions through hierarchical layers and decodes it by leveraging both current and past layers of encoded data. Moreover, this framework holds great potential for semantic communication, where the objective extends beyond data reconstruction to preserving specific semantic attributes throughout the communication process. These semantic features could be crucial elements such as class labels, essential for classification tasks, or other key attributes that require preservation. Within this framework, each level of encoded data can be carefully designed to retain specific data semantics. As a result, the precision of a semantic classifier can be progressively enhanced across successive layers, emphasizing the preservation of targeted semantics throughout the encoding and decoding stages. We conduct experiments on the MNIST and CIFAR10 datasets. The experiments on both datasets show that our proposed method can surpass the SSCC method in reconstructing data at different resolutions, and that it enables the extraction of semantic features with increasing confidence in successive layers. This capability is particularly advantageous for prioritizing and preserving the more crucial semantic features within the datasets.

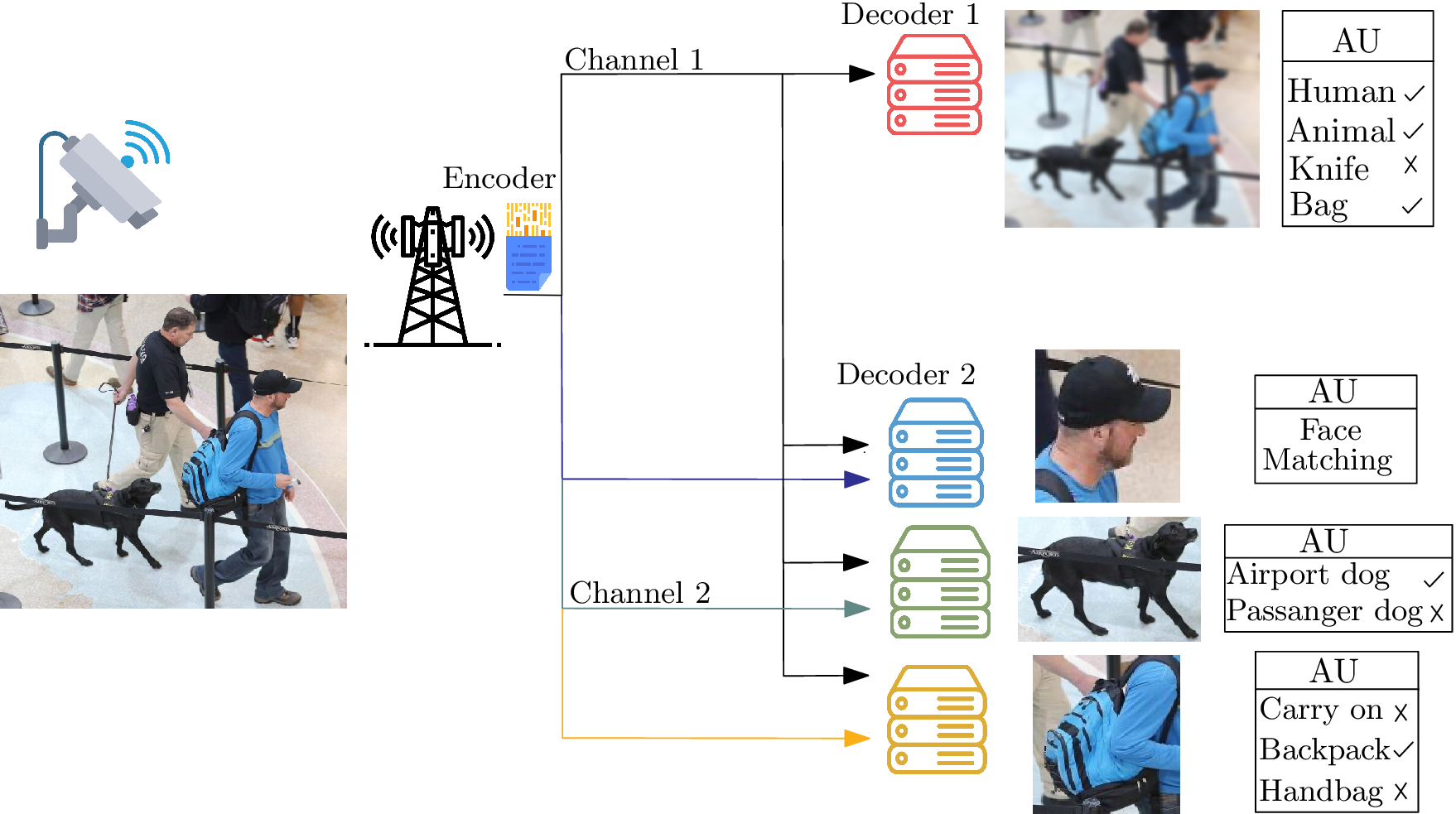

Personalized Federated Learning

Personalized Decentralized Multi-Task Learning Over Dynamic Communication Graphs

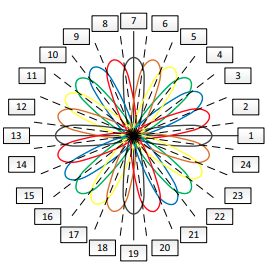

Data heterogeneity is one of the biggest challenges for decentralized and federated learning algorithms, especially when each user wants to learn a specific task. Even when personalized headers are attached to a shared network (PF-MTL), aggregating all the networks with a decentralized algorithm can degrade performance because of heterogeneity in the data. Our algorithm uses exchanged gradients to calculate the correlations among tasks automatically, and dynamically adjusts the communication graph to connect mutually beneficial tasks and isolate those that may negatively impact each other. This algorithm improves the learning performance and leads to faster convergence compared to the case where all clients are connected to each other regardless of their correlations. We conduct experiments on a synthetic Gaussian dataset and a large-scale celebrity attributes (CelebA) dataset. The experiment with the synthetic data illustrates that our proposed method is capable of detecting tasks that are positively and negatively correlated. Moreover, the results of the experiments with CelebA demonstrate that the proposed method may train significantly faster than fully-connected networks.
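The idea of deriving a communication graph from gradient correlations can be illustrated with a minimal sketch. It assumes that correlation is measured by cosine similarity between flattened gradient vectors and that two clients are connected when the similarity exceeds a threshold; the function names, the threshold, and the toy gradients are hypothetical and are not taken from the paper.

```python
import math

def cosine_similarity(g1, g2):
    """Cosine similarity between two flattened gradient vectors."""
    dot = sum(a * b for a, b in zip(g1, g2))
    n1 = math.sqrt(sum(a * a for a in g1))
    n2 = math.sqrt(sum(b * b for b in g2))
    if n1 == 0.0 or n2 == 0.0:
        return 0.0  # treat a zero gradient as uncorrelated
    return dot / (n1 * n2)

def build_communication_graph(gradients, threshold=0.0):
    """Connect clients i and j only if their gradients are positively aligned.

    Assumption: a similarity above `threshold` marks a mutually beneficial pair.
    """
    n = len(gradients)
    adjacency = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if cosine_similarity(gradients[i], gradients[j]) > threshold:
                adjacency[i][j] = adjacency[j][i] = True
    return adjacency

# Toy gradients: clients 0 and 1 point in similar directions, client 2 opposes both.
grads = [[1.0, 0.5], [0.9, 0.6], [-1.0, -0.4]]
graph = build_communication_graph(grads)
```

In this toy case clients 0 and 1 end up connected while client 2 is isolated; recomputing the graph every few rounds would make it dynamic.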

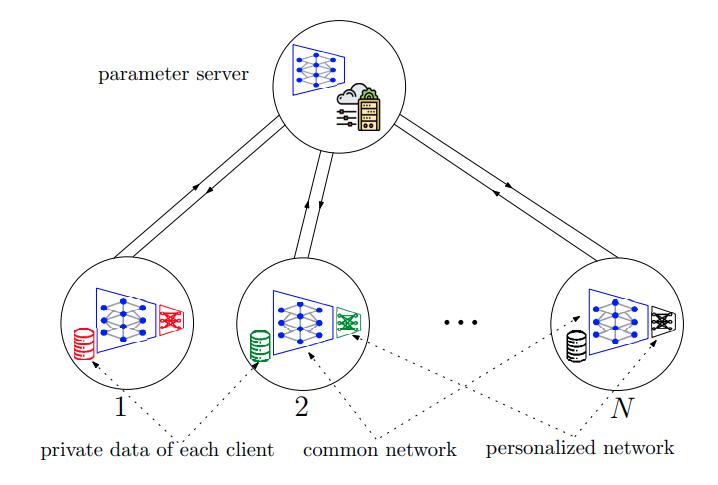

FedGradNorm: Personalized Federated Gradient-Normalized Multi-Task Learning

Multi-task learning (MTL) is a novel framework to learn several tasks simultaneously with a single shared network where each task has its distinct personalized header network for fine-tuning. MTL can be implemented in federated learning settings as well, in which tasks are distributed across clients. In federated settings, the statistical heterogeneity due to different task complexities and the data heterogeneity due to the non-i.i.d. nature of local datasets can both degrade the learning performance of the system. In addition, tasks can negatively affect each other's learning performance due to negative transference effects. To cope with these challenges, we propose FedGradNorm, which uses a dynamic-weighting method to normalize gradient norms in order to balance learning speeds among different tasks. FedGradNorm improves the overall learning performance in a personalized federated learning setting. We provide convergence analysis for FedGradNorm by showing that it has an exponential convergence rate. We also conduct experiments on the multi-task facial landmark (MTFL) dataset and a wireless communication system dataset (RadComDynamic). The experimental results show that our framework trains faster than an equal-weighting strategy. In addition to improving training speed, FedGradNorm also compensates for the imbalanced datasets among clients.
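Dynamic weighting of gradient norms can be sketched along the lines of the centralized GradNorm heuristic. This is an illustrative assumption about the general technique, not the exact FedGradNorm update: the function name, the hyperparameters `alpha` and `lr`, and the sign-based step are all hypothetical choices made for brevity.

```python
def gradnorm_step(weights, grad_norms, losses, initial_losses, alpha=1.5, lr=0.025):
    """One GradNorm-style update of the per-task loss weights.

    weights:        current loss weight of each task
    grad_norms:     norm of each task's unweighted gradient on the shared layer
    losses:         current loss of each task
    initial_losses: loss of each task at the start of training
    """
    n = len(weights)
    weighted = [w * g for w, g in zip(weights, grad_norms)]
    mean_norm = sum(weighted) / n
    # Loss ratio: a larger value means the task has made less progress so far.
    ratios = [l / l0 for l, l0 in zip(losses, initial_losses)]
    mean_ratio = sum(ratios) / n
    # Target gradient norm: slower tasks get a larger target.
    targets = [mean_norm * (r / mean_ratio) ** alpha for r in ratios]
    new_weights = []
    for w, g, wg, t in zip(weights, grad_norms, weighted, targets):
        sign = (wg > t) - (wg < t)  # subgradient of |w * g - t| w.r.t. w is sign * g
        new_weights.append(max(w - lr * sign * g, 1e-6))
    # Renormalize so that the weights sum to the number of tasks.
    total = sum(new_weights)
    return [n * w / total for w in new_weights]

# Task 0 has reduced its loss less than task 1, so its weight should grow.
new_w = gradnorm_step([1.0, 1.0], [1.0, 1.0], [0.9, 0.3], [1.0, 1.0])
```

Here the slower task (task 0) receives a larger weight after the step, which is the balancing effect the paragraph above describes.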

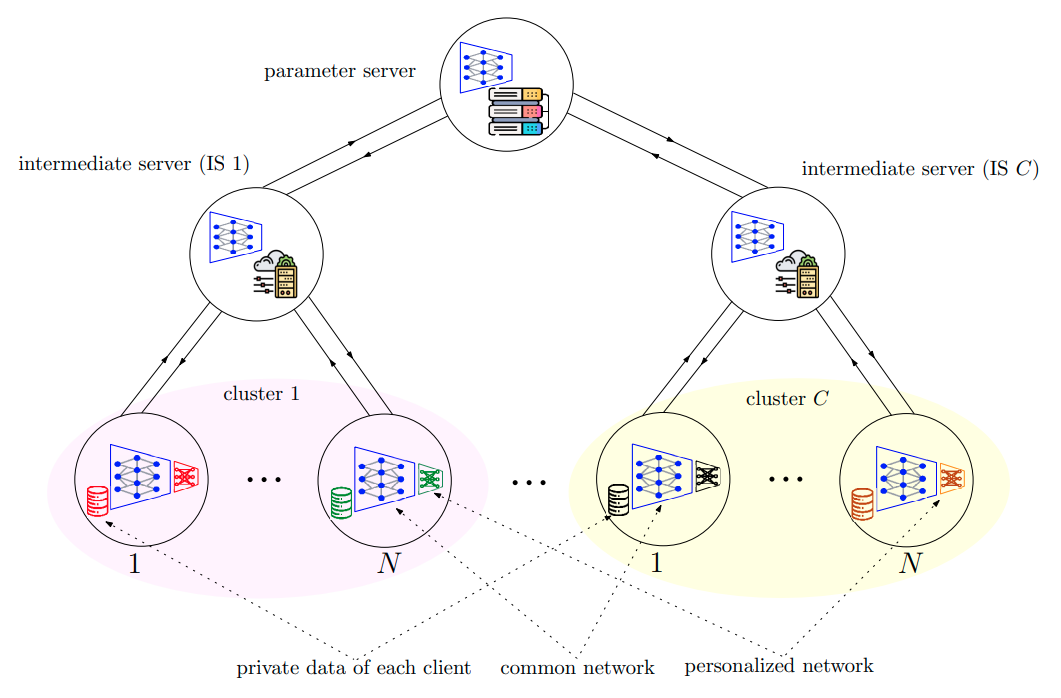

Hierarchical Over-the-Air FedGradNorm

Multi-task learning (MTL) is a learning paradigm to learn multiple related tasks simultaneously with a single shared network where each task has a distinct personalized header network for fine-tuning. MTL can be integrated into a federated learning (FL) setting if tasks are distributed across clients and clients have a single shared network, leading to personalized federated learning (PFL). To cope with statistical heterogeneity across clients in the federated setting, which can significantly degrade the learning performance, we use a distributed dynamic weighting approach. To perform the communication between the remote parameter server (PS) and the clients efficiently over the noisy channel in a power- and bandwidth-limited regime, we utilize over-the-air (OTA) aggregation and hierarchical federated learning (HFL). Thus, we propose hierarchical over-the-air (HOTA) PFL with a dynamic weighting strategy, which we call HOTA-FedGradNorm. Our algorithm considers the channel conditions during the dynamic weight selection process. We conduct experiments on a wireless communication system dataset (RadComDynamic). The experimental results demonstrate that training with HOTA-FedGradNorm is faster than with algorithms that use a naive static equal-weighting strategy. In addition, HOTA-FedGradNorm provides robustness against the negative channel effects by compensating for the channel conditions during the dynamic weight selection process.
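As a rough illustration of over-the-air aggregation in a two-level hierarchy, the sketch below models the channel as a plain sum of the transmitted updates plus additive Gaussian noise. Fading, power control, and the dynamic weighting are omitted, and the assumption that both hops use OTA aggregation, along with every function and parameter name, is hypothetical rather than taken from the paper.

```python
import random

def ota_aggregate(updates, noise_std, rng):
    """Average of updates that superpose on a noisy multiple-access channel.

    The receiver observes the sum of all transmitted vectors plus Gaussian
    noise, then divides by the number of transmitters.
    """
    dim = len(updates[0])
    received = [
        sum(u[k] for u in updates) + rng.gauss(0.0, noise_std)
        for k in range(dim)
    ]
    return [x / len(updates) for x in received]

def hierarchical_ota(clusters, noise_std=0.1, seed=0):
    """Two-level aggregation: clients -> intermediate servers -> parameter server.

    Averaging the cluster means equals the global mean only when all
    clusters have the same number of clients.
    """
    rng = random.Random(seed)
    cluster_means = [ota_aggregate(c, noise_std, rng) for c in clusters]
    return ota_aggregate(cluster_means, noise_std, rng)

# Two clusters with two clients each; every client holds a 2-dimensional update.
clusters = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]
noisy_estimate = hierarchical_ota(clusters, noise_std=0.1)
```

Setting `noise_std=0.0` recovers the exact average of the client updates, which makes it easy to check how much the channel noise perturbs the aggregate.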

Wireless Communication

Beamforming-based random access protocol for massive MIMO systems

Massive multiple-input multiple-output (MIMO) systems are a promising technology for the next generation of wireless communication systems (5G). In this research, a novel random access (RA) protocol based on beamforming properties is proposed to admit as many user equipments (UEs) as possible, enhance the system performance, and manage the limited number of orthogonal pilots simultaneously. First, the proposed RA protocol is studied theoretically to derive formulas for the average number of users who gain access to the base station (BS). The results show that the same number of UEs can access the BS as with conventional protocols such as multichannel RA solutions. However, in contrast to the conventional protocols, the proposed protocol guarantees that the admitted UEs are located in separate locations; thus, the signal-to-interference-plus-noise ratio (SINR) of the admitted users is enhanced in the multiuser MIMO data transmission phase. A series of enhancements are then proposed to save orthogonal pilots and to significantly increase the average number of admitted users. It is shown that, using our novel protocols, we can admit almost as many users as the number of antennas at the BS, using only a fraction of the orthogonal pilots needed in conventional protocols.

Publications

Conference Papers

• Transformer-aided semantic communications. M. Mortaheb, E. Karakaya, M. A. Khojastepour, and S. Ulukus

Submitted to IEEE GLOBECOM (2024)

• Deep learning-based real-time quality control of standard video compression for live streaming. M. Mortaheb, M. A. Khojastepour, S. T. Chakradhar, and S. Ulukus

IEEE ICC (2024)

• Deep learning-based real-time rate control for live streaming on wireless networks. M. Mortaheb, M. A. Khojastepour, S. T. Chakradhar, and S. Ulukus

IEEE ICMLCN (2024)

• Semantic multi-resolution communications. M. Mortaheb, M. A. Khojastepour, S. T. Chakradhar, and S. Ulukus

IEEE GLOBECOM (2023)

• Personalized decentralized multi-task learning over dynamic communication graph. M. Mortaheb and S. Ulukus

IEEE CISS (2023)

• Hierarchical over-the-air FedGradNorm. C. Vahapoglu, M. Mortaheb, and S. Ulukus

IEEE ASILOMAR (2022)

• FedGradNorm: personalized federated gradient-normalized multi-task learning. M. Mortaheb, C. Vahapoglu, and S. Ulukus

IEEE SPAWC (2022)

Journal Publications

• Personalized federated multi-task learning over wireless fading channels. M. Mortaheb, C. Vahapoglu, and S. Ulukus

MDPI Algorithms (2022)

• Beamforming-based random access protocol for massive MIMO systems. M. Mortaheb and A. Abbasfar

Transactions on Emerging Telecommunications Technologies (2022)

Contact

8223 Paint Branch Dr, College Park, MD, USA

Email: mortaheb@umd.edu